We used an EVGA Dark board for testing the i9-10980XE. Most boards this far on the high end look like they escaped from Las Vegas; this one goes for a quiet, clean aesthetic instead. (Jim Salter)

Although the EVGA Dark board eschews the bling-bling, it doesn't stint on chipset cooling. Here we see a cooling fan built into the first PCIe slot, a nice touch. (Jim Salter)

Ignore the photographer reflected in the powered-down NZXT Kraken X62 cooler; the focus here should be on the tiny chipset fans to the right. (Jim Salter)

We aren't big fans of bling, but we have to admit to getting some guilty pleasure out of watching the Kraken X62's LED rings slowly spinning around. (Jim Salter)

Intel's new i9-10980XE, debuting on the same day as AMD's new Threadripper line, occupies a strange market segment: the "budget high-end desktop." Its 18 cores and 36 threads sound pretty exciting compared to Intel's top-end gaming CPU, the i9-9900KS, but they pale in comparison to the Threadripper 3970X's 32 cores and 64 threads. Making things worse, despite having more than double the cores, the i9-10980XE has trouble differentiating itself even from the much less expensive i9-9900KS in many benchmarks.

This leaves the new part falling back on what it does have going for it—cost, both initial and operational. If you can't use the full performance output of a Threadripper, the i9-10980XE will give you roughly half the performance for roughly half of the cost, and it extends that savings into ongoing electrical costs as well.

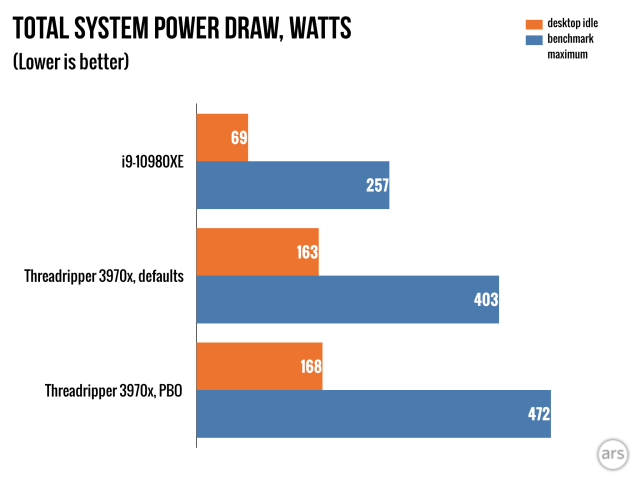

Power

Our i9-10980XE test rig was a lot easier to share an office with than the competing Threadripper 3970X rig. Its EVGA X299 Dark motherboard didn't make it look like a scene from Poltergeist was playing out in the office, and it drew a lot less power and threw off a lot less palpable heat.

To be completely fair, some of the Threadripper rig's obnoxiousness probably could have been mitigated with motherboard settings: our NZXT Kraken X62 cooler's fans were in full-on leafblower mode the entire time the Threadripper was running, even while idling. While the Threadripper system did idle at an eye-watering 163W to the i9-10980XE system's 69W, that's not enough to explain the difference in fan RPMs. The i9-10980XE system didn't spin the fans up to obnoxious levels even under its full 257W benchmarking load.

No matter how many excuses we make for the Threadripper based on its ROG motherboard's aggressive default fan settings, though, we're still looking at 69W vs 163W system draw at desktop idle and 257W vs 403W system draw under full load. In the current HEDT segment, the i9-10980XE is definitely the more frugal part to live with—particularly for those of us who live in Southern climes where July starts sometime in April and doesn't end until late October.
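To put those wall-power numbers into electricity-bill terms, here is a rough Python sketch. The wattages are the whole-system measurements from this review; the 16-hours-idle/8-hours-loaded duty cycle and the $0.13/kWh rate are hypothetical placeholders, so substitute your own. It also ignores the extra air-conditioning load that the waste heat creates.

```python
"""Rough annual energy cost of each test rig's measured wall-power draw."""

# Whole-system wall draw measured in this review, in watts.
MEASURED_WATTS = {
    "i9-10980XE": {"idle": 69, "load": 257},
    "Threadripper 3970X": {"idle": 163, "load": 403},
}

# Hypothetical assumptions, not taken from the review: adjust for your own use.
HOURS_PER_DAY = {"idle": 16, "load": 8}  # assumed daily duty cycle
PRICE_PER_KWH = 0.13                      # assumed electricity rate, USD per kWh


def annual_kwh(watts, hours, days=365):
    """Energy per year in kWh, given per-state wattage and hours per day."""
    wh_per_day = sum(watts[state] * hours[state] for state in hours)
    return wh_per_day * days / 1000.0


def annual_cost(watts, hours=HOURS_PER_DAY, price=PRICE_PER_KWH):
    """Yearly electricity cost in USD under the assumed duty cycle and rate."""
    return annual_kwh(watts, hours) * price


if __name__ == "__main__":
    for name, watts in MEASURED_WATTS.items():
        kwh = annual_kwh(watts, HOURS_PER_DAY)
        print(f"{name}: {kwh:,.0f} kWh/year, ${annual_cost(watts):,.2f}/year")
```

Under those assumptions the Threadripper rig uses roughly 975 kWh more per year than the i9-10980XE rig, or about $127 at the assumed rate.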

Performance

Yes, the i9-10980XE fell drastically behind the Threadripper 3970X. More importantly, it didn't test as well as the mean of 115 published benchmarks of last year's i9-9980XE. It wasn't much faster than the considerably cheaper i9-9900KS, either. (Jim Salter)

We also saw considerably lower single-core Passmark results on the i9-10980XE than in previous i9-9980XE tests. (Jim Salter)

The i9-10980XE came in roughly even with the i9-9980XE on Cinebench R20, well within the margin of error. (Jim Salter)

If you don't need maximum performance, the i9-10980XE is a more frugal choice than the Threadripper 3970X: a little more than half the performance at exactly half the price, with considerably lower power draw to go along with it. (Jim Salter)

| Specs at a glance: Core i9-10980XE, as tested | |
|---|---|
| OS | Windows 10 Professional |
| CPU | 3.0GHz 18-core Intel Core i9-10980XE (4.7GHz boost) with 24.75MB smart cache (expected retail $1,000) |
| RAM | 16GB HyperX Fury DDR4 3200 ($88 at Amazon) |
| GPU | MSI GeForce RTX 2060 Super Ventus ($420 at Amazon) |
| Storage | Samsung 860 Pro 1TB SSD ($275 at Amazon) |
| Motherboard | EVGA X299 Dark ($415 at Amazon) |
| Cooling | NZXT Kraken X62 fluid cooler with 280mm radiator ($140 at Amazon) |
| PSU | EVGA 850GQ Semi Modular PSU ($130 at Amazon) |
| Chassis | Praxis Wetbench test chassis ($200 at Amazon) |
| Price as tested | ≈$2,668 |
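As a quick sanity check on the "price as tested" line, the itemized prices can simply be summed. This Python sketch uses only the figures in the table; the CPU price is Intel's expected retail figure and the rest are the Amazon prices listed at review time, so the real total will drift as street prices move.

```python
"""Check the 'price as tested' total against the itemized spec table."""

# Prices from the spec table, in USD.
PARTS = {
    "Core i9-10980XE": 1000,  # expected retail, not a street price
    "16GB HyperX Fury DDR4 3200": 88,
    "MSI GeForce RTX 2060 Super Ventus": 420,
    "Samsung 860 Pro 1TB SSD": 275,
    "EVGA X299 Dark": 415,
    "NZXT Kraken X62": 140,
    "EVGA 850GQ PSU": 130,
    "Praxis Wetbench": 200,
}

total = sum(PARTS.values())
cpu_share = PARTS["Core i9-10980XE"] / total  # fraction of the build spent on the CPU

print(f"Price as tested: ${total:,}")
print(f"CPU share of build cost: {cpu_share:.0%}")
```

The parts add up to $2,668, matching the table, with the CPU accounting for roughly 37% of the build.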

If you haven't spent the entirety of 2019 under a rock, it shouldn't be any surprise that Intel's i9-10980XE comes up short when compared to AMD's Threadripper 3970X, which also launched this Monday. The i9-10980XE is an 18-core, 36-thread part to the Threadripper's 32 cores and 64 threads, and AMD's 7nm process has ended any offsetting performance-per-core advantages Intel once had.

What's more surprising is that the i9-10980XE fell generally short of its elder brother, last year's i9-9980XE. Its best general-purpose benchmarking result—Cinebench R20—placed it within the margin of error of the older part. Worse, both single-threaded and multi-threaded Passmark ratings strongly favored the older chip.

We also compared the i9-10980XE to its gaming sibling, the i9-9900KS. Although the i9-10980XE scored significantly higher in Cinebench R20, it didn't score significantly better in the more general-purpose Passmark test, despite having more than twice the cores—and costing twice as much. Meanwhile, in single-threaded Passmark, the 9900KS was 39% faster—and once again, the i9-10980XE fell behind its older sibling, the i9-9980XE.

Then again, following a pattern we're all learning to recognize, the i9-10980XE costs half as much as the i9-9980XE, which sits in $2,000 territory alongside the much more powerful Threadripper 3970X. We feel sorry for any retailers sitting on existing i9-9980XE stock, because while the older part is slightly more performant for general-purpose workloads, the difference certainly isn't worth double the cost.

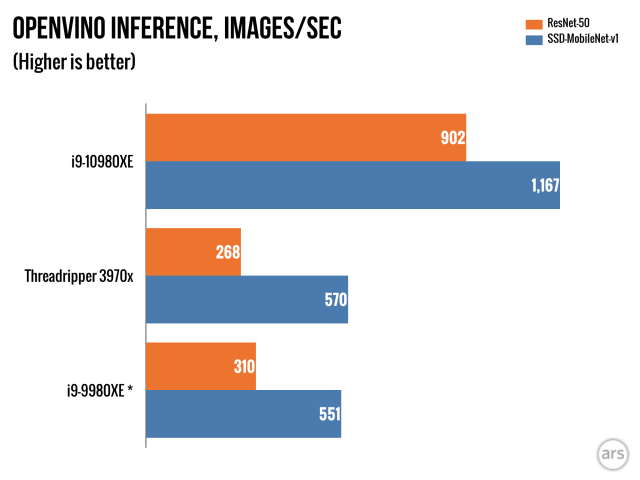

AI inference workloads

Artificial intelligence workloads are the one place the i9-10980XE shines in its own right. Intel has been investing a lot of engineering effort in AI workload optimization, and the i9-10980XE supports its Deep Learning Boost x86 instruction-set extensions. Intel has been telling us that AI workloads built with compilers that take advantage of the new instructions can easily double their throughput.
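On this Cascade Lake-X part, Deep Learning Boost is Intel's branding for the AVX-512 VNNI instructions. Their core trick is folding the 8-bit multiply-accumulate at the heart of quantized (int8) inference into a single instruction: VPDPBUSD multiplies groups of four unsigned bytes by four signed bytes and adds the sum into a 32-bit accumulator, work that previously took a three-instruction sequence (VPMADDUBSW, VPMADDWD, VPADDD). The Python below is only a scalar emulation of that instruction's per-lane arithmetic, to show what it computes; it says nothing about speed, and the function names are our own.

```python
"""Scalar emulation of one AVX-512 VNNI VPDPBUSD operation (illustrative only)."""


def to_int32(x: int) -> int:
    """Wrap to signed 32-bit, since VPDPBUSD does not saturate (VPDPBUSDS does)."""
    x &= 0xFFFFFFFF
    return x - (1 << 32) if x & 0x80000000 else x


def vpdpbusd(acc: list[int], a_u8: list[int], b_s8: list[int]) -> list[int]:
    """For each 32-bit lane, add four u8*s8 products to that lane's accumulator."""
    if len(a_u8) != 4 * len(acc) or len(b_s8) != 4 * len(acc):
        raise ValueError("need four byte pairs per 32-bit accumulator lane")
    if not all(0 <= v <= 255 for v in a_u8) or not all(-128 <= v <= 127 for v in b_s8):
        raise ValueError("a must be unsigned 8-bit, b must be signed 8-bit")
    result = []
    for lane, total in enumerate(acc):
        for j in range(4):
            total += a_u8[4 * lane + j] * b_s8[4 * lane + j]
        result.append(to_int32(total))
    return result


# One lane: 1*5 + 2*(-6) + 3*7 + 4*(-8), added to an accumulator of 0.
print(vpdpbusd([0], [1, 2, 3, 4], [5, -6, 7, -8]))
```

In real code this instruction is reached through a compiler intrinsic such as `_mm512_dpbusd_epi32`, or indirectly through an inference library that can use it, rather than by hand.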

We ran AIXPRT's reference benchmarking workloads to test this claim. Sure enough, the i9-10980XE more than doubled the throughput of the otherwise more performant i9-9980XE in OpenVINO image recognition work. It's worth noting that OpenVINO is itself an Intel initiative, and it's unlikely to be as well optimized for AMD processors as for Intel's. That lack of optimization may explain some of the performance delta between the Threadripper 3970X and the i9-10980XE, but it's difficult to come up with anything but Deep Learning Boost as the reason the new chip blew the doors off Intel's own i9-9980XE.

And yes, inference workload performance on CPUs really does matter. To explain why, let's first make sure we're clear on some basic terminology. A neural network can be operated in one of two modes—training or inference. In training mode, the neural network is essentially doing a drunkard's walk through a problem space, adjusting values and weights until it has "learned" the best way to navigate that problem space. Inference mode is much lighter weight; instead of having to traverse the entire space repeatedly, the neural network just examines one problem within the space and gives you its best answer, based on what it has learned during training.
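To make the training/inference split concrete, here is a deliberately tiny Python sketch. A single logistic "neuron" stands in for a real neural network, and plain gradient descent stands in for whatever optimizer a real framework would use; the OR dataset, learning rate, and epoch count are arbitrary choices for illustration. Training sweeps the whole dataset thousands of times adjusting weights, while inference is one weighted sum and one sigmoid.

```python
"""Training vs. inference on a one-neuron model (toy illustration)."""
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights: list[float], bias: float, x: list[float]) -> float:
    """Inference: one weighted sum plus one sigmoid, using fixed parameters."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def train(samples, epochs: int = 2000, lr: float = 0.5):
    """Training: sweep the dataset many times, nudging parameters to cut the error."""
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in samples:
            # For a sigmoid with log-loss, d(loss)/d(z) simplifies to (prediction - label).
            err = predict(weights, bias, x) - y
            weights = [w - lr * err * xi for w, xi in zip(weights, x)]
            bias -= lr * err
    return weights, bias


# Toy dataset: logical OR of two inputs (an arbitrary example).
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(OR_DATA)                # 2,000 epochs x 4 samples = 8,000 updates
print(round(predict(weights, bias, [1, 0])))  # a single forward pass
```

The asymmetry is the point: training here performs 8,000 parameter updates, while answering a question afterward takes one pass, which is why a general-purpose CPU can often handle inference even when training belongs on a GPU.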

When doing significant amounts of training, you want a high-end GPU (or a bank of high-end GPUs), full stop. They can easily outperform general-purpose CPUs by an order of magnitude or more, saving both training time and operational power. Inference, however, is a different story—although the GPU may still significantly outperform a CPU, if the CPU's operational throughput and latency suffice for real-time interaction, it's much more convenient to let it do the work.
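The "is the CPU good enough?" test in the preceding paragraph ultimately comes down to a measurement. The Python sketch below times a stand-in inference function and compares its 95th-percentile latency against a latency budget. The 100 ms budget and the `fake_inference` workload are hypothetical placeholders; in practice you would time your actual model on the target CPU.

```python
"""Check whether a CPU inference call fits a real-time latency budget (sketch)."""
import statistics
import time
from typing import Callable


def p95_latency_ms(infer: Callable[[], object], runs: int = 50) -> float:
    """Run `infer` repeatedly and return its 95th-percentile latency in ms."""
    if runs < 2:
        raise ValueError("need at least two runs to estimate a percentile")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        infer()
        samples.append((time.perf_counter() - start) * 1000.0)
    # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile.
    return statistics.quantiles(samples, n=20)[-1]


def fits_budget(infer: Callable[[], object], budget_ms: float, runs: int = 50) -> bool:
    """True if the tail latency stays within the budget."""
    return p95_latency_ms(infer, runs) <= budget_ms


def fake_inference() -> int:
    """Hypothetical stand-in for a model's forward pass."""
    return sum(i * i for i in range(100_000))


if __name__ == "__main__":
    BUDGET_MS = 100.0  # hypothetical "feels instant" budget for interactive use
    latency = p95_latency_ms(fake_inference)
    verdict = "fits" if latency <= BUDGET_MS else "exceeds"
    print(f"p95 latency {latency:.1f} ms {verdict} the {BUDGET_MS:.0f} ms budget")
```

Tail latency is used rather than the average because an interactive tool feels sluggish when even an occasional request lags.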

Running inference workloads on general-purpose CPUs makes it possible to widely deploy them as tools that can be used in the field, on relatively generic hardware, and without the need for an always-on internet connection. This can also alleviate both privacy and latency concerns involved in sending data off to the cloud for remote inference processing.

Some examples of real-world, modern AI inference processing include voice recognition, image recognition, and pattern analysis. While much of this is currently being done in the cloud, we expect increasing demand for local processing capabilities. Digital personal assistants such as Cortana are one obvious application, but AI can go even further than that.

Right now, the Insider edition of Office 365 lets you type "what was the highest selling product in Q4?" into a text box and immediately get the appropriate chart... but only if you're willing to offload your data to the cloud. Without local inference processing chops, working with confidential data that can't leave the site where it's produced will feel more and more restrictive. Intel is betting a lot of its future on recognizing that fact, and on being at the head of the pack when the rest of us do, too.

Listing image by Jim Salter
